Autonomous Optimization for Microgrid ESS Scheduling

This case study shows how predictive scheduling and reinforcement learning (RL) can optimize energy storage system (ESS) operation under uncertain microgrid conditions. The result is a self-adaptive system that responds automatically to changes in its operating environment.

Challenges

Uncertainty in Demand and Supply Forecasting

ESS scheduling must account for uncertainty in both demand (load) and supply (renewable generation) within the microgrid.

Need for Cost Optimization under Varying Operation Modes

Optimal ESS operation should minimize electricity costs when the microgrid is grid-connected and reduce diesel fuel consumption when it is islanded and supplied by diesel generators.
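The two objectives can be sketched as cost functions that a scheduler would minimize for a candidate ESS schedule. This is a minimal illustration, not the case study's engine: the function names, the sign convention (positive ESS power means charging), the assumption that exported surplus earns nothing, and the linear diesel fuel curve with its slope and intercept coefficients are all hypothetical.

```python
from typing import Sequence


def grid_connected_cost(
    load_kw: Sequence[float],
    pv_kw: Sequence[float],
    ess_kw: Sequence[float],
    price_per_kwh: Sequence[float],
    dt_h: float = 1.0,
) -> float:
    """Electricity purchase cost while grid-connected.

    ess_kw[t] > 0 charges the ESS, ess_kw[t] < 0 discharges it.
    Assumption (hypothetical): surplus exported to the grid earns nothing.
    """
    cost = 0.0
    for load, pv, ess, price in zip(load_kw, pv_kw, ess_kw, price_per_kwh):
        grid_import = max(load - pv + ess, 0.0)  # power bought from the grid
        cost += grid_import * price * dt_h
    return cost


def islanded_fuel(
    load_kw: Sequence[float],
    pv_kw: Sequence[float],
    ess_kw: Sequence[float],
    rated_kw: float,
    slope: float = 0.25,      # hypothetical litres per kWh of output
    intercept: float = 0.08,  # hypothetical litres per hour per kW of rating
    dt_h: float = 1.0,
) -> float:
    """Diesel fuel (litres) while islanded, using an assumed linear fuel curve:
    fuel rate = slope * output + intercept * rated_kw whenever the generator runs."""
    fuel = 0.0
    for load, pv, ess in zip(load_kw, pv_kw, ess_kw):
        gen_kw = max(load - pv + ess, 0.0)  # generator covers the remaining net load
        if gen_kw > rated_kw:
            raise ValueError("schedule exceeds generator rating")
        if gen_kw > 0.0:  # no fuel is burned while the generator is off
            fuel += (slope * gen_kw + intercept * rated_kw) * dt_h
    return fuel
```

A scheduler would minimize `grid_connected_cost` in grid-connected mode and `islanded_fuel` in islanded mode. The intercept term means a lightly loaded generator burns fuel inefficiently, which is one reason ESS dispatch matters during islanded operation.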

Requirement for Continuous Optimization and Environmental Adaptability

The system must support continuous optimization without structural modifications while remaining adaptable to external changes, such as tariff adjustments and operational policy updates.

Solutions

Weather-Based Predictive Scheduling

Generate baseline ESS schedules from day-ahead (D-1) weather forecasts used to predict load and renewable generation, and update them hourly as the latest forecast data arrive.
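A baseline day-ahead schedule can be sketched as a small dynamic program over a discretized state of charge (SOC), given hourly forecasts of net load (load minus renewable generation) and the tariff. The case study does not say which optimizer it uses; dynamic programming, the function and parameter names, the 1-hour step, lossless storage, and zero export revenue are illustrative assumptions, and the weather-driven model that produces `net_load_kwh` is assumed to exist upstream.

```python
from typing import List, Sequence, Tuple


def plan_day_ahead(
    net_load_kwh: Sequence[float],  # forecast load minus forecast renewables, per hour
    price: Sequence[float],         # tariff per kWh, per hour
    soc0_kwh: float,                # measured state of charge at the start
    capacity_kwh: float = 10.0,     # hypothetical ESS capacity
    p_max_kw: float = 3.0,          # hypothetical charge/discharge power limit
    step_kwh: float = 1.0,          # SOC discretization
) -> Tuple[List[float], float]:
    """Backward dynamic programming over discretized SOC.

    Returns (schedule, cost). schedule[t] > 0 charges, < 0 discharges (kWh in hour t).
    Assumptions: 1-hour steps, lossless storage, exported surplus earns nothing.
    """
    n = int(round(capacity_kwh / step_kwh))  # number of SOC steps above empty
    m = int(p_max_kw // step_kwh)            # max SOC change per hour, in steps
    s0 = int(round(soc0_kwh / step_kwh))
    horizon = len(net_load_kwh)

    value = [0.0] * (n + 1)                  # cost-to-go after the final hour
    policy: List[List[int]] = []
    for t in reversed(range(horizon)):
        new_value = [float("inf")] * (n + 1)
        best = [0] * (n + 1)
        for s in range(n + 1):
            # Only SOC changes that keep the battery within [0, capacity].
            for k in range(max(-m, -s), min(m, n - s) + 1):
                grid = max(net_load_kwh[t] + k * step_kwh, 0.0)
                cost = grid * price[t] + value[s + k]
                if cost < new_value[s]:
                    new_value[s], best[s] = cost, k
        value = new_value
        policy.append(best)
    policy.reverse()

    # Forward pass: follow the optimal decisions from the initial SOC.
    schedule, s = [], s0
    for t in range(horizon):
        k = policy[t][s]
        schedule.append(k * step_kwh)
        s += k
    return schedule, value[s0]
```

For the hourly update, the same function could be called again on the remaining hours with the refreshed forecast and the measured SOC, executing only the first hour of the new plan (a receding-horizon pattern). For example, with net load [2, 2, 5, 5] kWh, prices [0.1, 0.1, 0.3, 0.3], and an empty battery, the plan charges 3 kWh in each cheap hour and discharges 3 kWh in each expensive hour, for a cost of 2.2.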

Real-Time Deviation Tracking and Rescheduling

Recalculate ESS schedules every 15 minutes based on real-time deviations between forecasted and actual values of both load and renewable generation.
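The 15-minute correction step can be sketched with two helpers: one shifts the remaining forecast by the latest observed error, and one adjusts the next interval's ESS setpoint to absorb the net-load deviation within power and SOC limits. Both the geometric error decay and the absorb-the-deviation rule are hypothetical simplifications, as are all names and default limits; a fuller implementation might instead rerun the schedule optimizer on the corrected forecast.

```python
from typing import List, Sequence


def corrected_forecast(
    remaining: Sequence[float], last_error: float, decay: float = 0.5
) -> List[float]:
    """Shift the remaining forecast by the latest error, fading it out geometrically.
    The value h steps ahead (h = 1, 2, ...) is adjusted by last_error * decay**h."""
    return [value + last_error * decay ** (h + 1) for h, value in enumerate(remaining)]


def reschedule_setpoint(
    planned_kw: float,        # planned ESS power for the next interval, > 0 = charge
    forecast_load_kw: float,
    actual_load_kw: float,
    forecast_pv_kw: float,
    actual_pv_kw: float,
    soc_kwh: float,
    capacity_kwh: float = 10.0,  # hypothetical ESS capacity
    p_max_kw: float = 3.0,       # hypothetical power limit
    dt_h: float = 0.25,          # 15-minute interval
) -> float:
    """Adjust the planned setpoint by the observed net-load deviation."""
    net_error = (actual_load_kw - actual_pv_kw) - (forecast_load_kw - forecast_pv_kw)
    # Extra net demand is absorbed by charging less or discharging more.
    setpoint = planned_kw - net_error
    # Respect both the power rating and the energy left in (or room left in) the ESS.
    max_charge = min(p_max_kw, (capacity_kwh - soc_kwh) / dt_h)
    max_discharge = min(p_max_kw, soc_kwh / dt_h)
    return min(max(setpoint, -max_discharge), max_charge)
```

For example, if net load comes in 3 kW above forecast while the plan called for 2 kW of charging, the setpoint becomes 1 kW of discharging, provided the SOC allows it.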

Reinforcement Learning-Based Autonomous Optimization

Apply reinforcement learning so that scheduling adapts autonomously to external changes, such as tariff adjustments, without manual intervention from experts.
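The case study does not name its reinforcement learning algorithm. As an illustration only, the sketch below uses tabular Q-learning on a hypothetical four-hour day. The agent's state is just the hour and the SOC; the tariff never appears in the state and reaches the agent only through the reward (negative electricity cost), so any price-aware behaviour has to be learned from experience. All prices, loads, names, and hyperparameters are made up.

```python
import random
from typing import List, Tuple

# Hypothetical toy day with four hourly steps.
PRICE = [0.1, 0.1, 0.3, 0.3]     # TOU tariff per kWh: used only inside the reward
NET_LOAD = [2.0, 2.0, 5.0, 5.0]  # load minus renewables, kWh per hour
ACTIONS = (-1, 0, 1)             # kWh per hour: discharge, idle, charge
MAX_SOC = 2                      # storage capacity in kWh


def env_step(hour: int, soc: int, action: int) -> Tuple[int, float]:
    """Apply an action; returns (next_soc, reward), where reward = -electricity cost."""
    next_soc = min(max(soc + action, 0), MAX_SOC)  # clip to SOC limits
    grid = max(NET_LOAD[hour] + (next_soc - soc), 0.0)
    return next_soc, -grid * PRICE[hour]


def greedy(q_row: List[float]) -> int:
    """Index of the highest-valued action."""
    return max(range(len(ACTIONS)), key=lambda i: q_row[i])


def train(episodes: int = 10000, alpha: float = 0.1, epsilon: float = 0.2, seed: int = 0):
    """Tabular Q-learning; the state is (hour, soc) and never includes the price."""
    rng = random.Random(seed)
    horizon = len(PRICE)
    # Zero initialization is optimistic here because every reward is negative.
    q = [[[0.0] * len(ACTIONS) for _ in range(MAX_SOC + 1)] for _ in range(horizon)]
    for _ in range(episodes):
        soc = 0
        for hour in range(horizon):
            # Epsilon-greedy exploration.
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = greedy(q[hour][soc])
            next_soc, reward = env_step(hour, soc, ACTIONS[a])
            future = max(q[hour + 1][next_soc]) if hour + 1 < horizon else 0.0
            q[hour][soc][a] += alpha * (reward + future - q[hour][soc][a])
            soc = next_soc
    return q


def greedy_rollout(q) -> Tuple[List[int], float]:
    """Run the learned policy once; returns (actions taken, total cost)."""
    soc, total, taken = 0, 0.0, []
    for hour in range(len(PRICE)):
        action = ACTIONS[greedy(q[hour][soc])]
        soc, reward = env_step(hour, soc, action)
        taken.append(action)
        total -= reward
    return taken, total
```

On this toy day the greedy policy learned by `train()` should charge in the two cheap hours and discharge in the two expensive ones, the same plan an exact optimizer would return. A realistic system would need a richer state (forecasts, operating mode) and function approximation rather than a table.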

Applications & Benefits

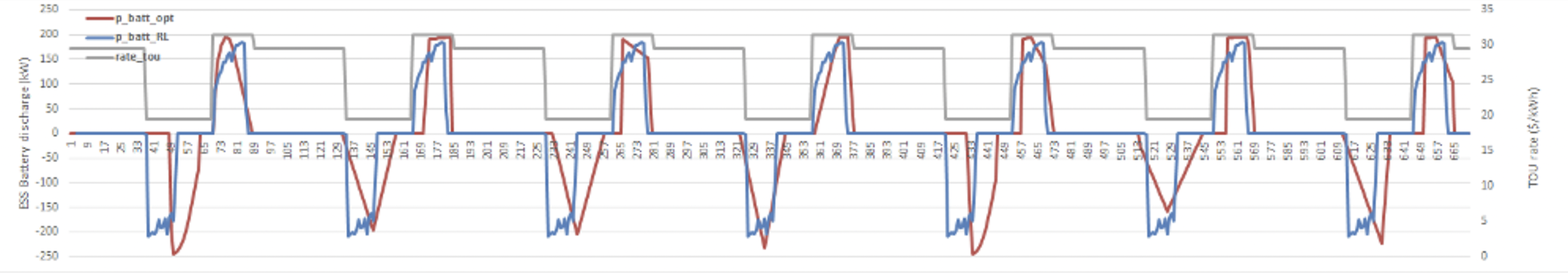

“The schedule generated by the reinforcement learning–based solution closely matched the outputs of the optimization engine, significantly improving ESS operational stability and efficiency.”

- Reinforcement learning-based schedules achieved results nearly identical to those produced by traditional optimization engines.

- Demonstrated the ability to infer the characteristics of time-of-use (TOU) tariffs and operate accordingly without being given the tariff information explicitly. This points to potential for autonomous learning and for adapting to future changes in pricing schemes or to alternative optimization objectives, such as improving diesel generator efficiency.